If you’re looking to integrate a local LLM like deepseek-r1 with Cursor, you’ve come to the right place! In this guide, you’ll learn how to use Ollama for a setup that’s not just powerful and private, but also easy on your wallet. No more usage limits or unexpected bills: just local AI assistance at your fingertips.

Prerequisites

Before we dive in, you’ll need two essential tools:

- Ollama - Your local LLM server

- Ngrok - For free and secure tunneling

Getting Started

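First, head over to Ollama’s website and download the latest version. While that’s installing, create a free Ngrok account, grab Ngrok from their download page, and connect it to your account with the authtoken shown on your Ngrok dashboard (`ngrok config add-authtoken <your-token>`).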
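Before moving on, you can confirm that both tools ended up on your PATH. Here’s a minimal Bash sketch; `check_prereqs` is a hypothetical helper name, not part of Ollama or Ngrok:

```shell
# Hypothetical helper: report which of the given commands are on PATH.
# Prints "found:" lines to stdout and "missing:" lines to stderr,
# and returns 0 only if every command was found.
check_prereqs() {
  local missing=0
  local tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool"
    else
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Running `check_prereqs ollama ngrok` should print a `found:` line for each tool; a `missing:` line means that install isn’t on your PATH yet.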

Setting Up Ollama

Now, let’s start by pulling the deepseek-r1 model. Open your terminal and run:

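```shell
ollama pull deepseek-r1
```

Once downloaded, fire up the Ollama server in the background. If the Ollama desktop app is already running, quit it first, since it already occupies port 11434: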

```shell
OLLAMA_ORIGINS="*" ollama serve > /dev/null 2>&1 &
```

Creating a Secure Tunnel with Ngrok

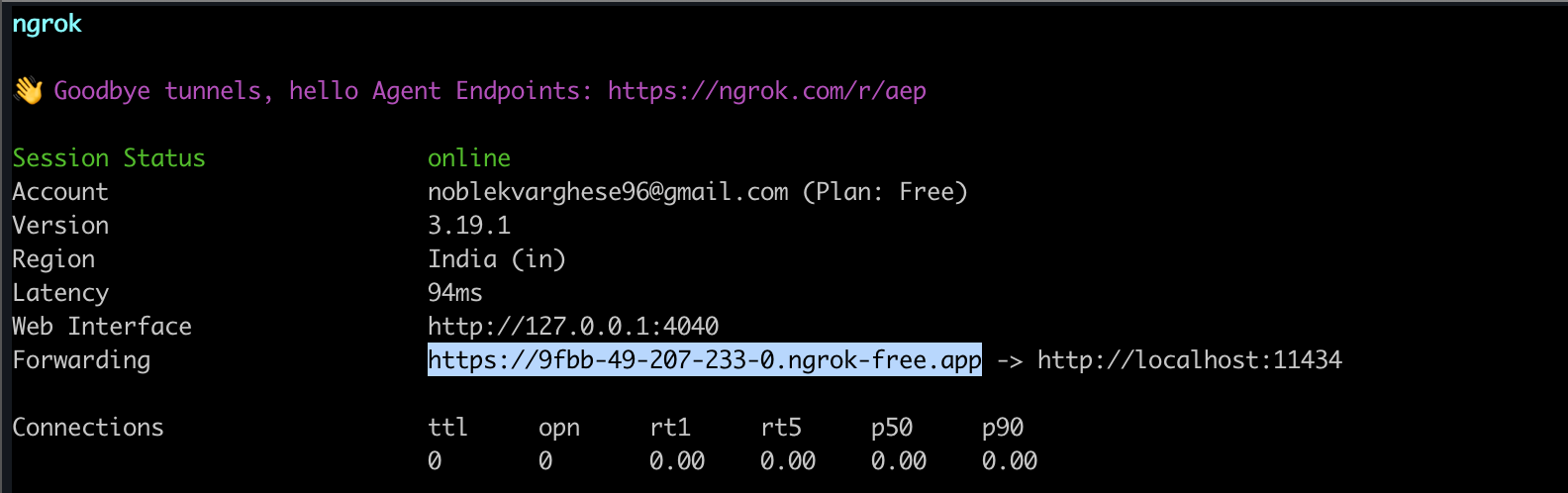

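Now for the interesting part. Cursor sends requests to your configured base URL from its own servers rather than from your machine, so a localhost address won’t work. Instead, we’ll use Ngrok to create a secure tunnel to your local Ollama server: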

```shell
ngrok http 11434 --host-header="localhost:11434"
```

Security Note: Keep your Ngrok URL private! Anyone who has it can send requests to your Ollama server. If it ever gets compromised, simply restart Ngrok to generate a new URL.
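If you’d rather not copy the URL out of the terminal, the Ngrok agent also serves a local API, by default at `http://127.0.0.1:4040/api/tunnels`, which lists each tunnel’s `public_url`. The sketch below pulls the first HTTPS URL out of that JSON with `grep` and `sed`. `ngrok_public_url` is a hypothetical helper name, and the parsing assumes the response keeps the `"public_url": "..."` shape rather than using a real JSON parser:

```shell
# Hypothetical helper: read the ngrok agent's /api/tunnels JSON on stdin
# and print the first https public_url it contains.
# Assumes entries look like "public_url":"https://..." (optional spaces
# after the colon); this is pattern matching, not full JSON parsing.
ngrok_public_url() {
  grep -o '"public_url": *"https://[^"]*"' \
    | head -n 1 \
    | sed -e 's/^"public_url": *"//' -e 's/"$//'
}
```

For example: `curl -s http://127.0.0.1:4040/api/tunnels | ngrok_public_url`. If you have `jq` installed, a real JSON parser is the sturdier choice.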

Configuring Cursor

Here’s where everything comes together:

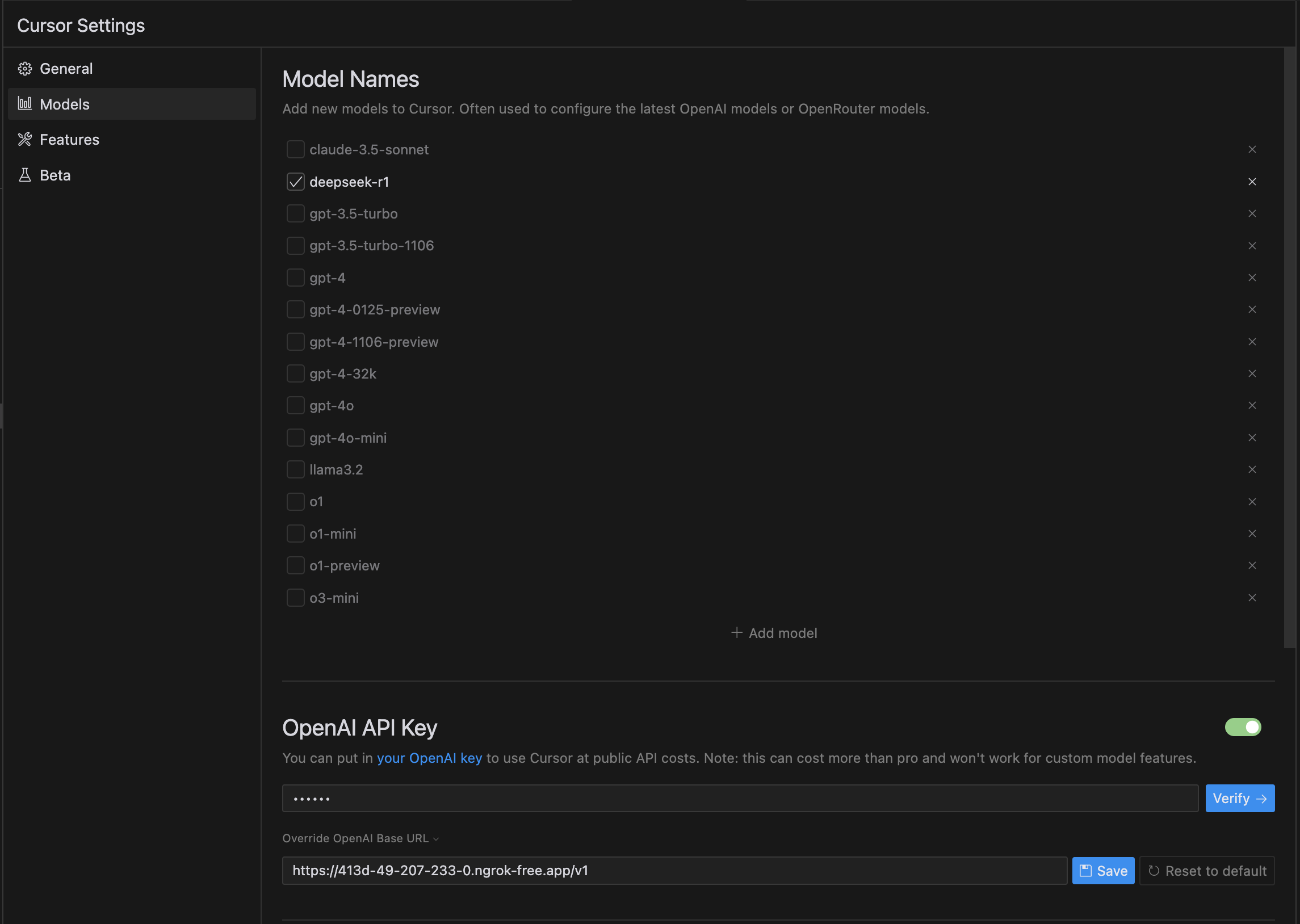

- Copy your Ngrok URL (the https:// Forwarding address shown in the Ngrok terminal).

- Open Cursor settings and find the OpenAI Base URL field. Paste your Ngrok URL and append /v1 (e.g., https://ollama.ngrok.app/v1).

- In the model menu, add your model name (in this case, deepseek-r1) and select the model. Make sure the name exactly matches the model name in Ollama.

- Set the OpenAI key to ollama. Ollama ignores the key, but a value is still required here.
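To check these settings outside Cursor first, you can send the same kind of request Cursor will make. The Bash sketch below assumes Ollama’s OpenAI-compatible `/v1/chat/completions` endpoint; the helper names `cursor_base_url`, `json_escape`, and `chat_payload` are hypothetical, and the JSON escaping only covers single-line prompts:

```shell
# Hypothetical helper: turn an ngrok URL into the value for Cursor's
# "OpenAI Base URL" field by dropping trailing slashes and appending /v1.
cursor_base_url() {
  local url="$1"
  while [ "${url%/}" != "$url" ]; do
    url="${url%/}"
  done
  printf '%s/v1\n' "$url"
}

# Escape backslashes and double quotes so a single-line string can be
# embedded in JSON. Newlines and other control characters are NOT handled.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build a minimal OpenAI-style chat completion request body.
chat_payload() {
  local model prompt
  model=$(json_escape "$1")
  prompt=$(json_escape "$2")
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}]}\n' "$model" "$prompt"
}
```

With your own URL in `NGROK_URL`, a test call looks like `curl "$(cursor_base_url "$NGROK_URL")/chat/completions" -H "Content-Type: application/json" -d "$(chat_payload deepseek-r1 'Say hello')"`. If that returns a completion, the same base URL and model name should work in Cursor.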

And voilà! You’re now running Cursor with your very own local LLM. No more dependency on cloud-hosted models, complete control over your AI environment, and better privacy. What’s not to love?

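Remember, local LLMs might not be as powerful as their cloud counterparts, but they offer strong privacy and customization options, which makes them a good fit for development and testing. Note that this particular setup still needs an internet connection, since Cursor reaches Ollama through the Ngrok tunnel.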

While this guide helps you set up local LLMs with Cursor, please note that Cursor Tab will not work with this setup. Cursor Tab uses Cursor’s own custom model and is available only as part of their Pro features. This integration works only with the command palette and other basic AI features.

Happy building! 🚀